Generative AI & Ad Quality

With the rapid scalability of AI-generated content, fraudulent ads are flooding the digital space, making it harder for ad-supported media to maintain a trustworthy environment.

In the hands of threat actors, generative AI can churn out global clickbait campaigns that prey on publishers' audiences at scale. Fraudsters increasingly use AI to create convincing fake narratives, manipulate images, and simulate videos, giving their schemes a deceptive sheen of legitimacy. This misuse of technology lures users into scams and undermines the integrity of the entire advertising ecosystem.

The problem of AI-generated creatives extends beyond a few rogue ads: deceptive content threatens to overwhelm legitimate programmatic channels.

The intensifying use of AI for malicious purposes, and its expected widespread adoption in 2024, underscore the urgency of ad quality safeguards. To counteract these risks effectively, digital media professionals first need to understand the threats publishers face.

How Generative AI is Leveraged for Clickbait Advertising

Undermining Consumer Trust with Misleading Product Offers

Generative AI's capacity to create lifelike product visuals and compelling copy is increasingly exploited to market counterfeit or nonexistent products, making even the most implausible offers appear enticing. Publishers therefore need to monitor all inventory for clickbait creatives to ensure that the product advertising on their sites and platforms is authentic.

Misleading product offers lure users with clickbait ads for nonexistent or counterfeit goods, preying on their hopes and fears.

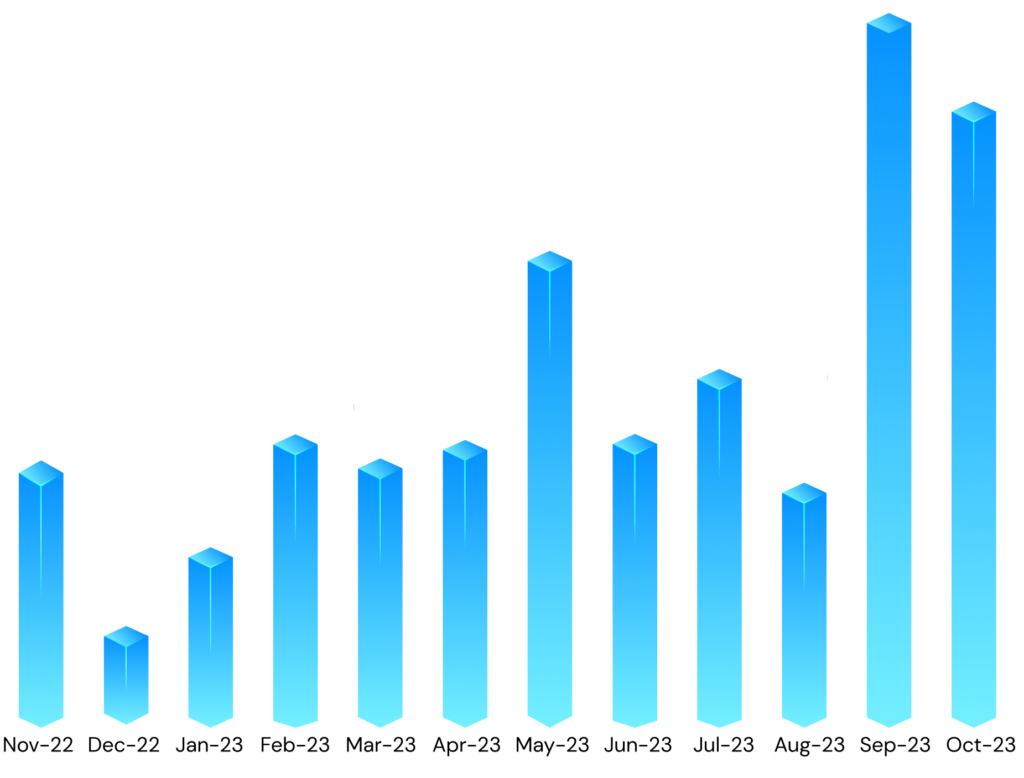

Q4’s Alarming Trend in False Advertising

Since early 2023, misleading product offers have risen sharply, peaking in the fourth quarter. Publishers need complete visibility over their programmatic inventory and ad security protection to ensure users are not duped by malicious ads. Failing to implement clickbait protections risks harming audiences and can erode trust in your site.

Misleading Product Offers 2023

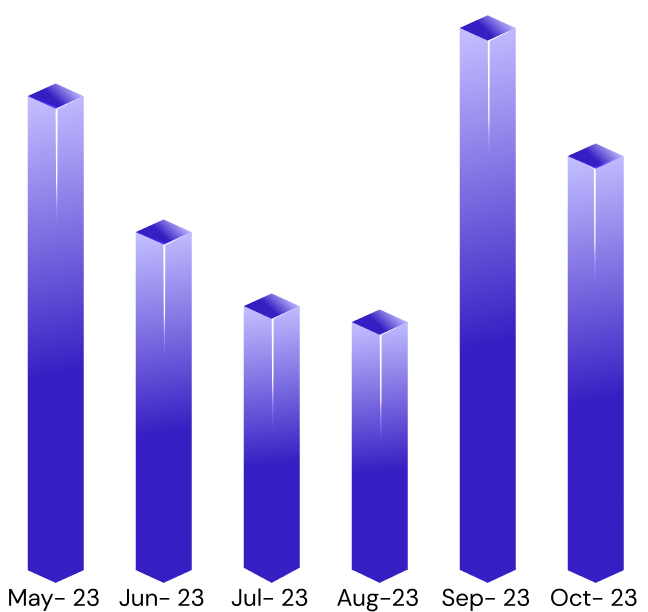

Fuelling a Surge in Financial Scams

Financial scams exploit the likenesses of recognized celebrities and shocking headlines to lure users into clicking on ads. These ads redirect users to deceptive landing pages promoting fake financial services or instruments.

Generative AI raises the threat to digital publishing, as fraudsters use it to craft persuasive fake endorsements and 'proven' lucrative returns that entice victims into malicious investment opportunities. GeoEdge's Security Research team indicates that, unlike other types of cybercrime, financial scams drive people to pay the scammer directly, rather than relying on roundabout tactics like installing ransomware or selling personal data to another company.

Financial Ad Scams In H2 2023

Today's fraudsters invest significant effort in cloaking their ploys in legitimacy. Their landing pages replicate news websites from the regions they geo-target, and they set up fake websites for the 'investment companies' promoted in their clickbait ads. Some even fabricate LinkedIn profiles for the supposed 'executives' shown in those sites' About Us sections.

Fraudsters also craft counterfeit news portals featuring 'testimonials' from individuals who supposedly achieved remarkable success. These scammers even run Google Ads search campaigns targeting wary individuals who search, 'Is XYZ a genuine investment operation?' Cybercriminals go to these lengths to convince potential victims that their offer is legitimate and entice them to invest in their fraudulent schemes or services.

Shaping Political Narratives: Influencing State and Local Elections

Generative AI heightens the stakes in political advertising. Deepfake technology can synthesize videos of public figures making fictitious statements, which could unduly influence public opinion. Distorted political ads have dire implications for the democratic process, as well as for the public's confidence in publisher integrity.

Digital media professionals must serve as guardians of ad content, reviewing and verifying all political advertising. Identifying AI-generated content is critical to curtailing the spread of political disinformation.

Publishers Can Regain Control Over Generative AI Risks by:

- Establishing a proactive policy for monitoring harmful messaging and provocative visuals in both ads and landing pages.

- Identifying and blocking advertisers that seek to provoke fear and shock.

- Empowering readers to flag offensive ads directly from the ad slot. This allows ads to be reviewed quickly, with any problematic ads referred to the ad ops team for immediate action.